Understanding Uni-Modal Feature Learning in Supervised Multi-Modal Learning

Have you ever wondered how machines can understand and process information from multiple sources? The answer lies in a field known as multi-modal learning. This article looks at uni-modal feature learning, a crucial aspect of supervised multi-modal learning. By the end, you’ll understand how the technique works and why it matters in artificial intelligence.

What is Multi-Modal Learning?

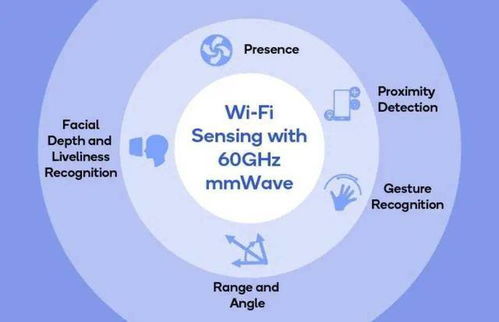

Multi-modal learning is a branch of artificial intelligence that focuses on the integration of information from different sources, such as text, images, and audio. The goal is to enable machines to understand and process information in a more human-like manner, by combining insights from various modalities.

Understanding Uni-Modal Feature Learning

Uni-modal feature learning is a technique that involves extracting and representing features from a single modality. In the context of multi-modal learning, this means focusing on one type of data source, such as text or images, to gain insights into the underlying patterns and relationships within that modality.
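As a minimal sketch of what extracting features from a single modality can look like, the example below builds one feature vector from text and another from an image, with no interaction between the two. The bag-of-words and intensity-histogram features, the vocabulary, the pixel values, and the function names are all assumptions chosen for illustration. Real systems typically learn such features with neural networks rather than computing them by hand.

```python
from collections import Counter

def text_features(sentence, vocabulary):
    """Bag-of-words counts: one count per vocabulary word."""
    counts = Counter(sentence.lower().split())
    return [counts[word] for word in vocabulary]

def image_features(pixels, bins=4, max_value=255):
    """Normalized intensity histogram of a grayscale image (rows of ints)."""
    histogram = [0] * bins
    flat = [p for row in pixels for p in row]
    for p in flat:
        index = min(p * bins // (max_value + 1), bins - 1)
        histogram[index] += 1
    return [count / len(flat) for count in histogram]

# Hypothetical inputs, one per modality
vocabulary = ["cat", "dog", "sat"]
text_vec = text_features("The cat sat near the other cat", vocabulary)
image_vec = image_features([[0, 64], [128, 255]])

print(text_vec)   # [2, 0, 1]
print(image_vec)  # [0.25, 0.25, 0.25, 0.25]
```

Each function sees only its own modality, which is the defining property of a uni-modal feature extractor.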

The Importance of Uni-Modal Feature Learning

Uni-modal feature learning plays a crucial role in supervised multi-modal learning for several reasons:

- It allows for a more focused analysis of each modality, enabling deeper understanding of the individual data sources.
- It can improve the performance of multi-modal learning models by providing a solid foundation for feature representation.
- It can help in addressing the challenges of data imbalance and heterogeneity that often arise in multi-modal datasets.
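As a rough illustration of the last two points, the sketch below normalizes each modality's features separately and then concatenates them into one vector for a downstream supervised model. The feature values, the L2 normalization, and the function names `l2_normalize` and `fuse` are assumptions chosen for this example; it addresses only differences in scale between modalities, not class imbalance.

```python
import math

def l2_normalize(vector):
    """Scale a vector to unit length; all-zero vectors are returned unchanged."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector] if norm > 0 else list(vector)

def fuse(*modality_features):
    """Feature-level fusion: normalize each modality on its own, then concatenate."""
    fused = []
    for features in modality_features:
        fused.extend(l2_normalize(features))
    return fused

# Hypothetical feature vectors on very different scales
text_vec = [3.0, 4.0]
audio_vec = [300.0, 400.0]

fused = fuse(text_vec, audio_vec)
print(fused)
```

After normalization both modalities contribute values in the same range, so neither dominates the fused vector simply because its raw numbers are larger.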

Applications of Uni-Modal Feature Learning

Uni-modal feature learning has found applications in various domains, including:

- Image recognition: By focusing on image features, machines can better understand and classify images.
- Text analysis: By extracting and representing text features, machines can gain insights into the content and context of textual data.
- Speech recognition: By analyzing audio features, machines can improve their ability to understand and transcribe spoken language.

Challenges and Limitations

While uni-modal feature learning has proven to be a valuable technique, it also comes with its own set of challenges and limitations:

- It may not capture the full complexity of multi-modal interactions, as it focuses on a single modality.
- It can be computationally expensive, especially when dealing with large datasets.
- It may not be suitable for all types of multi-modal learning tasks, as some tasks require a more holistic approach.
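The first limitation can be made concrete with a toy case in which the label depends only on the interaction between two modalities. In the sketch below, each modality is reduced to one binary feature and the label is their XOR. The dataset and the helper `accuracy_of_best_rule` are invented for illustration and do not come from any real benchmark.

```python
def accuracy_of_best_rule(samples, feature_index):
    """Best accuracy any rule can reach when it sees only one binary feature."""
    best = 0.0
    # Each mapping gives the prediction for feature value 0 and for feature value 1
    for mapping in [(0, 0), (0, 1), (1, 0), (1, 1)]:
        correct = sum(
            1 for features, label in samples
            if mapping[features[feature_index]] == label
        )
        best = max(best, correct / len(samples))
    return best

# Toy dataset: label = XOR of one binary feature from each modality
samples = [((a, b), a ^ b) for a in (0, 1) for b in (0, 1)]

modality_a_only = accuracy_of_best_rule(samples, 0)
modality_b_only = accuracy_of_best_rule(samples, 1)
# A rule that sees both features can model the interaction directly
joint = sum(1 for (a, b), label in samples if (a ^ b) == label) / len(samples)

print(modality_a_only, modality_b_only, joint)  # 0.5 0.5 1.0
```

Neither modality alone does better than chance here, while a rule that sees both is always right. That is the kind of cross-modal interaction a purely uni-modal view misses.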

Techniques for Uni-Modal Feature Learning

Several techniques can be employed for uni-modal feature learning, including:

- Deep learning: By using neural networks, machines can automatically learn and represent features from the data.
- Dimensionality reduction: Techniques like Principal Component Analysis (PCA) and t-SNE reduce the dimensionality of the data, making it easier to analyze. t-SNE is used mainly for visualization.
- Feature engineering: By manually designing and selecting features, we can improve the performance of our models.
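To make the dimensionality-reduction idea more concrete, here is a minimal sketch of PCA restricted to the first principal component. It finds that component by power iteration on the covariance matrix, using only the standard library. The data and function names are assumptions for this example, and in practice a tested library implementation would normally be preferred.

```python
import math

def first_principal_component(rows, iterations=100):
    """Return (column means, unit vector along the direction of largest variance)."""
    n, d = len(rows), len(rows[0])
    means = [sum(row[j] for row in rows) / n for j in range(d)]
    centered = [[row[j] - means[j] for j in range(d)] for row in rows]
    # Sample covariance matrix of the centered data
    cov = [
        [sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)]
        for i in range(d)
    ]
    vec = [1.0] * d  # starting guess; assumed not orthogonal to the answer
    for _ in range(iterations):
        product = [sum(cov[i][j] * vec[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(v * v for v in product))
        if norm == 0:  # constant data: no direction of variance exists
            break
        vec = [v / norm for v in product]
    return means, vec

def project(rows, means, component):
    """Reduce each row to a single score along the component."""
    return [
        sum((row[j] - means[j]) * component[j] for j in range(len(row)))
        for row in rows
    ]

# Hypothetical 2-D features that all lie on the line y = 2x
data = [[1.0, 2.0], [2.0, 4.0], [3.0, 6.0], [4.0, 8.0]]
means, component = first_principal_component(data)
scores = project(data, means, component)
print(component)
print(scores)
```

Because the points lie on a single line, one score per point preserves all of their variance. The component found points along that line, in the direction (1, 2) scaled to unit length.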

Real-World Examples

Let’s take a look at a few real-world examples where uni-modal feature learning has been applied:

| Application | Modality | Technique |
|---|---|---|
| Image classification | Images | Convolutional Neural Networks (CNNs) |
| Sentiment analysis | Text | Word Embeddings and Recurrent Neural Networks (RNNs) |
| Speech recognition | Audio | Mel Frequency Cepstral Coefficients (MFCCs) and Deep Belief Networks (DBNs) |